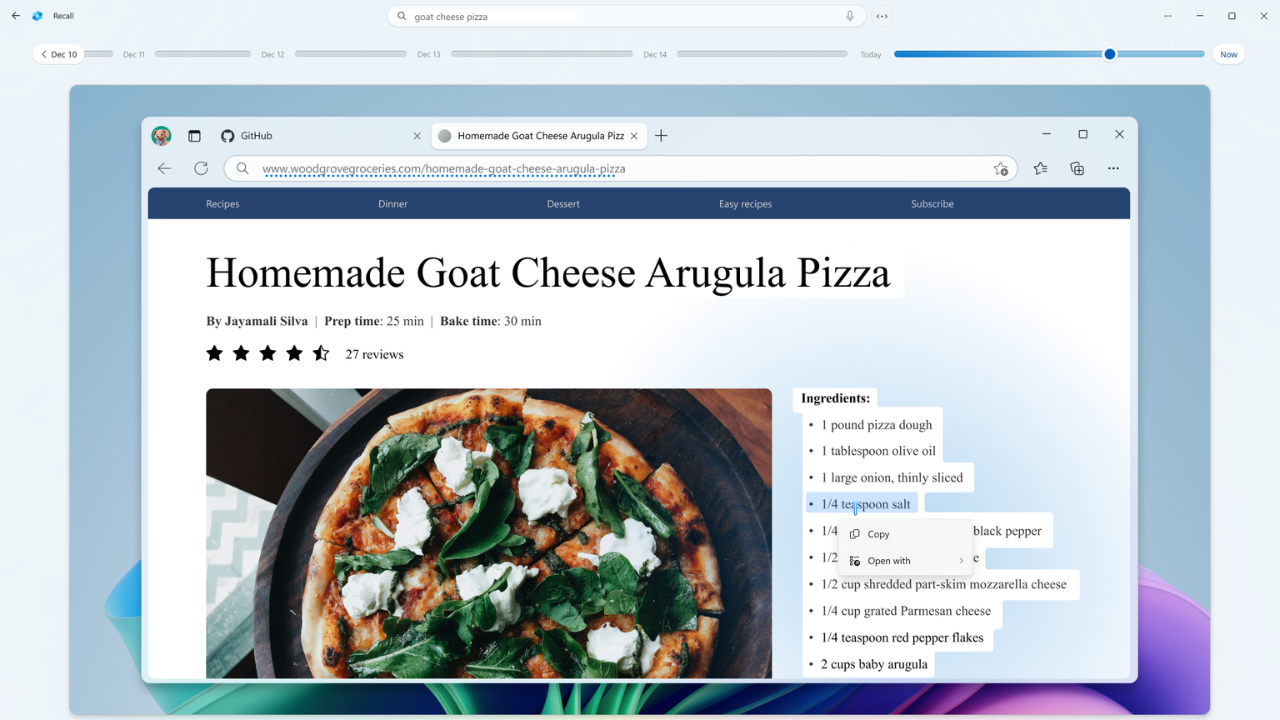

Microsoft has once again pushed back the launch of its controversial AI feature, Recall, which was initially set to debut in June. The revised target of December marks the third delay in its timeline. Recall is designed to take automatic screenshots of users' activity on their PCs and let them search through that history using natural language. Since the initial announcement, however, the feature has raised significant privacy and security concerns. Microsoft says it wants to better assure users of the feature's safety and security before rolling it out to Windows Insiders for testing.

The delays stem from a backlash over the way Recall would handle user data. Initially, the feature stored data in an unencrypted format and was enabled without users' consent, which prompted immediate outrage over privacy. This time around, Microsoft has made several changes to tighten security. Recall will now be entirely optional, meaning that users must actively turn it on. Screenshots will also be encrypted, and Windows Hello authentication will require users to verify their identity before they can access the data.

Users will also have more control over their information: they can uninstall Recall altogether and exclude certain apps, websites, and private browsing sessions from being recorded. Microsoft will also roll out anti-malware protections and rate-limiting to help ensure that the feature isn’t abused.

Even so, there is some scepticism about how much interest these improvements will generate for Recall. It was initially viewed as one of the key elements of the Copilot Plus experience, but its rocky rollout has put it at a disadvantage in gaining traction with users. The choice of a December timeline makes us wonder whether the feature will be delayed yet again, and whether Microsoft has really done enough to make it safe and reliable. For now, the future of Recall remains uncertain as users await its debut.